Machine Learning Methods

by David Kohanbash on January 3, 2017

There are many terms that are thrown around about machine learning. But what do they all mean, and how do they differ?

Let's start with machine learning itself. Machine learning “gives computers the ability to learn without being explicitly programmed” (Arthur Samuel, 1959). It is a large and complex field with many algorithms, most of which fall into one or more of the approaches below.

While machine learning is great, I should remind people that many machine learning algorithms fail outside of the narrow domain they were designed for. A common problem is over-training (overfitting) a model on a particular data set: the model learns the quirks of that data rather than the general pattern, so when it is applied somewhere else, the results are poor or wrong.
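
To make over-training concrete, here is a deliberately extreme, hypothetical Python sketch (the data and the rule `x >= 5` are made up for illustration). A "memorizer" stores every training example, so it scores perfectly on the data it was trained on, but it has learned nothing it can apply to new inputs. A 1-nearest-neighbor model also scores perfectly on its training data, so only held-out data reveals the difference between the two.

```python
from collections import Counter


def train_memorizer(xs, ys):
    """Stores every training example; unseen inputs get the majority label."""
    table = dict(zip(xs, ys))
    majority = Counter(ys).most_common(1)[0][0]
    return lambda x: table.get(x, majority)


def train_nearest_neighbor(xs, ys):
    """Predicts the label of the closest training example (1-nearest-neighbor)."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def accuracy(model, xs, ys):
    """Fraction of inputs for which the model predicts the correct label."""
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: the true rule (unknown to the models) is x >= 5.
train_x = [1, 2, 3, 6, 7, 8, 9]
train_y = [x >= 5 for x in train_x]
test_x = [0, 4, 10, 11]  # inputs never seen during training
test_y = [x >= 5 for x in test_x]

for name, trainer in [("memorizer", train_memorizer), ("1-NN", train_nearest_neighbor)]:
    model = trainer(train_x, train_y)
    print(name, accuracy(model, train_x, train_y), accuracy(model, test_x, test_y))
# memorizer 1.0 0.5
# 1-NN 1.0 1.0
```

Both models look perfect if you only check the training data, which is why results should always be checked on data the model has not seen.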

Here is a quick list of the most common types of machine learning:

Supervised Learning

Algorithms that learn from example data where the correct answers are known. The data is usually labeled by a human, and getting labels can be difficult, since humans with domain experience are often needed. Labeling data also takes time and can be boring. Algorithms such as decision trees and support vector machines (SVMs) can be very accurate on complex data.
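
As a minimal sketch of supervised learning, the Python below fits a decision stump (a decision tree with a single split) to a small, made-up labeled data set by trying every feature and threshold and keeping the split with the fewest training errors. Real decision-tree learners such as CART split recursively and usually score splits with impurity measures like Gini or entropy rather than raw error counts; this is only the simplest case.

```python
def fit_stump(X, y):
    """Return (feature, threshold, polarity) with the fewest training errors.

    polarity=True predicts 1 when row[feature] > threshold;
    polarity=False predicts 1 when row[feature] <= threshold.
    Assumes at least one feature takes two or more distinct values.
    """
    best = None  # (errors, feature, threshold, polarity)
    for f in range(len(X[0])):
        values = sorted({row[f] for row in X})
        # Candidate thresholds: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            for polarity in (True, False):
                errors = sum(
                    int((row[f] > t) == polarity) != label for row, label in zip(X, y)
                )
                if best is None or errors < best[0]:
                    best = (errors, f, t, polarity)
    return best[1:]


def predict_stump(stump, row):
    """Apply a fitted stump to one sample."""
    f, t, polarity = stump
    return int((row[f] > t) == polarity)


# Hypothetical labeled samples: two numeric features, binary labels from a human.
X = [[1.0, 5.0], [2.0, 4.0], [3.0, 7.0], [6.0, 5.0], [7.0, 3.0], [8.0, 6.0]]
y = [0, 0, 0, 1, 1, 1]

stump = fit_stump(X, y)
print(stump)  # (0, 4.5, True)
print(predict_stump(stump, [5.0, 9.0]))  # 1
```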

Unsupervised Learning

Here algorithms learn from unlabeled data. Many of these algorithms do clustering, which automatically groups similar data points together (K-means is a common example), and some can then do anomaly detection by flagging points that do not fit well into any of those clusters.
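
The K-means idea can be sketched in a few lines of Python: pick k starting centroids, assign each point to its nearest centroid, move each centroid to the mean of its points, and repeat until the assignments stop changing. This sketch makes simplifying assumptions: it uses the first k points as the starting centroids (libraries typically use smarter seeding such as k-means++), and the data is made up.

```python
import math


def kmeans(points, k, max_iters=100):
    """Cluster points (tuples of numbers) into k groups; returns (labels, centroids)."""
    centroids = [list(p) for p in points[:k]]  # naive initialization
    labels = None
    for _ in range(max_iters):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # converged: no point changed cluster
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = [sum(coord) / len(members) for coord in zip(*members)]
    return labels, centroids


points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (8.5, 9.0), (1.0, 0.5), (9.0, 8.5)]
labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

The distance from each point to its assigned centroid is also a simple basis for anomaly detection: points unusually far from every centroid are candidates for anomalies.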

Semi-Supervised Learning

Since labels can be difficult to obtain, this is a set of approaches that work when only some of the data is labeled. The idea is to make the best use of the few labels you have and accept that the result may be less accurate than with fully labeled data. One common approach is to first run an unsupervised algorithm to generate clusters; once you have clusters, each cluster can be labeled as a whole (for example, from the few labeled points it contains) and the result used for supervised learning.
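
The cluster-then-label idea can be sketched in Python as follows. It is a minimal illustration under simplifying assumptions: the data is one-dimensional and made up, only two points carry human labels, and each cluster simply takes the majority label of the labeled points inside it. If the clusters do not line up with the real classes, the propagated labels will be wrong.

```python
from collections import Counter


def kmeans_1d(xs, k, iters=20):
    """Tiny 1-D k-means with centers initialized evenly between min and max (k >= 2)."""
    lo, hi = min(xs), max(xs)
    centers = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    assign = []
    for _ in range(iters):
        assign = [min(range(k), key=lambda c: abs(x - centers[c])) for x in xs]
        for c in range(k):
            members = [x for x, a in zip(xs, assign) if a == c]
            if members:
                centers[c] = sum(members) / len(members)
    return assign


def cluster_then_label(xs, known_labels, k):
    """Propagate a few known labels (index -> label) to every point in the same cluster."""
    assign = kmeans_1d(xs, k)
    cluster_label = {}
    for c in range(k):
        votes = Counter(known_labels[i] for i in known_labels if assign[i] == c)
        # Clusters with no labeled point stay unlabeled (None).
        cluster_label[c] = votes.most_common(1)[0][0] if votes else None
    return [cluster_label[a] for a in assign]


xs = [1.0, 1.2, 0.8, 1.1, 5.0, 5.3, 4.9, 5.1]
known = {0: "small", 4: "large"}  # only 2 of 8 points were labeled by a human
print(cluster_then_label(xs, known, k=2))
# ['small', 'small', 'small', 'small', 'large', 'large', 'large', 'large']
```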

Active Learning

This can be a form of semi-supervised learning. In this approach the learned model examines itself and tries to find significant areas where it is uncertain because the data there is unknown or unlabeled. When it finds such an area, a human can be asked to provide a label. This can significantly reduce the number of labels a human needs to generate.
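
A minimal active-learning sketch in Python, assuming one-dimensional data that a single threshold can separate. The model's "unknown area" is the gap between the largest point labeled negative and the smallest point labeled positive; it asks a simulated human (the hypothetical `oracle` function) to label the unlabeled point closest to the middle of that gap, and stops once no unlabeled point remains in it. This querying strategy is a simple form of uncertainty sampling.

```python
def active_learn(pool, oracle, seed_neg, seed_pos):
    """Learn a 1-D threshold, asking the oracle only about uncertain points.

    Assumes the data is separable by one threshold and that seed_neg < seed_pos
    are already known to be negative and positive, respectively.
    """
    labels = {seed_neg: False, seed_pos: True}
    queries = []
    while True:
        max_neg = max(x for x, lab in labels.items() if not lab)
        min_pos = min(x for x, lab in labels.items() if lab)
        boundary = (max_neg + min_pos) / 2
        # Points between the two closest opposite labels form the uncertain region.
        uncertain = [x for x in sorted(pool) if max_neg < x < min_pos]
        if not uncertain:
            return boundary, queries
        query = min(uncertain, key=lambda x: abs(x - boundary))
        labels[query] = oracle(query)  # ask the human for one label
        queries.append(query)


def oracle(x):
    """Hypothetical stand-in for a human expert."""
    return x >= 6.3


pool = [float(i) for i in range(11)]  # 11 unlabeled examples
threshold, asked = active_learn(pool, oracle, seed_neg=0.0, seed_pos=10.0)
print(threshold, asked)  # 6.5 [5.0, 7.0, 6.0]
```

Here the simulated human labels 3 points (plus the 2 seed labels) instead of all 11.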

Reinforcement Learning (RL)

This is when the learning system improves based on feedback. A human or some other system (often the environment itself) tells the algorithm how good its output or action was, usually as a reward or penalty, and the algorithm improves based on its own performance. This is like learning to ride a bike: if you lean in the wrong direction and fall, you learn to lean the other way.
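
Here is a minimal Python sketch of learning from reward feedback. It is closer to a contextual bandit than to full RL (each decision gets immediate feedback, with no multi-step consequences), and the bike "physics" is a made-up rule: the hypothetical `bike_reward` function returns +1 when the agent leans the right way and -1 when it falls. The agent mostly picks the action it currently rates highest, occasionally explores at random, and nudges its estimates toward the rewards it receives.

```python
import random


def learn_from_feedback(states, actions, reward, episodes=200, alpha=0.5, epsilon=0.1, seed=0):
    """Tabular value learning from reward feedback (a one-step, bandit-style sketch)."""
    rng = random.Random(seed)
    value = {s: {a: 0.0 for a in actions} for s in states}
    for episode in range(episodes):
        state = states[episode % len(states)]
        if rng.random() < epsilon:
            action = rng.choice(actions)  # explore: try something at random
        else:
            action = max(actions, key=lambda a: value[state][a])  # exploit
        r = reward(state, action)  # feedback from the environment
        # Nudge the estimate toward the observed reward.
        value[state][action] += alpha * (r - value[state][action])
    return {s: max(actions, key=lambda a: value[s][a]) for s in states}


def bike_reward(state, action):
    """Hypothetical feedback: +1 for staying up, -1 for falling over."""
    correct = {"tilting_left": "lean_right", "tilting_right": "lean_left"}
    return 1.0 if correct[state] == action else -1.0


policy = learn_from_feedback(
    ["tilting_left", "tilting_right"], ["lean_left", "lean_right"], bike_reward
)
print(policy)  # {'tilting_left': 'lean_right', 'tilting_right': 'lean_left'}
```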

Transfer Learning

The idea with transfer learning is that we have spent many years doing machine learning and have built many models for different applications. Rather than starting from scratch, this approach takes a prior model and adapts or extends it for the new application. For example, if you know how one car drives, you can use that model as a starting point to build a controller for a different car. I have heard this referred to as “green learning” since it recycles old models, and recycling is a green practice.
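
A minimal Python sketch of the idea, assuming a simple linear model and made-up data for two hypothetical cars: the model fitted to car A is used as the starting point for car B and fine-tuned with only a few gradient-descent steps, then compared against a model trained from scratch with the same small budget. Warm-starting from an old model's parameters is only one form of transfer learning; with neural networks it is also common to reuse some layers and retrain others.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the line y = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_exact(xs, ys):
    """Ordinary least-squares line fit; stands in for the old, already-trained model."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    w = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return w, my - w * mx


def fine_tune(w, b, xs, ys, steps=3, lr=0.05):
    """A few gradient-descent steps on mean squared error, starting from (w, b)."""
    n = len(xs)
    for _ in range(steps):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2 / n * sum(residuals)
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


# Hypothetical data: some input (e.g. a speed setting) -> response, for two similar cars.
xs = [0.0, 1.0, 2.0, 3.0]
car_a = [1.0, 3.0, 5.0, 7.0]  # the old application: a model already exists
car_b = [1.0, 3.2, 5.4, 7.6]  # the new application: similar, small training budget

w_a, b_a = fit_exact(xs, car_a)  # the prior model
transfer = fine_tune(w_a, b_a, xs, car_b)
scratch = fine_tune(0.0, 0.0, xs, car_b)
print(mse(*transfer, xs, car_b), mse(*scratch, xs, car_b))
```

With the same small number of update steps, the model that starts from car A's parameters ends up far closer to car B's behavior than the one trained from scratch.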

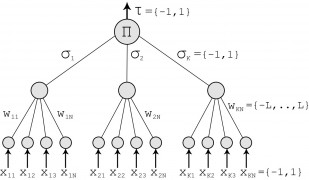

Deep Learning

Finally we get to deep learning. Of all the learning methods, the term “deep learning” probably gets abused the most. Deep learning automatically learns features, often from high-dimensional data such as images. It finds complex models where the features are often not obvious to a human, which is nice for very complex data where people might not know all of the important variables. The downside is that if you need to be able to justify or explain the decisions that the model produces, you sometimes cannot. Deep learning is built on neural networks with many layers (hence “deep”).
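
To show what "layers" of features means, here is a tiny two-layer network in Python that computes XOR, something no single linear threshold unit can do. The weights are hand-picked for illustration: the hidden units act as OR and AND detectors, and the output combines them as "OR but not AND". In deep learning, networks are much larger and the weights are learned from data (typically by gradient descent with backpropagation) rather than chosen by a person, which is why the learned features can be hard to explain.

```python
def step(z):
    """Threshold activation: fires (1) when the weighted input is positive."""
    return 1 if z > 0 else 0


def dense_layer(inputs, weights, biases):
    """One fully connected layer: weighted sum plus bias, then the activation."""
    return [
        step(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not learned) weights.
HIDDEN_WEIGHTS = [[1, 1], [1, 1]]
HIDDEN_BIASES = [-0.5, -1.5]  # unit 0 acts as OR, unit 1 acts as AND
OUTPUT_WEIGHTS = [[1, -1]]
OUTPUT_BIASES = [-0.5]  # output fires for OR and not AND


def xor_net(x1, x2):
    """Forward pass: inputs -> hidden feature layer -> output."""
    hidden = dense_layer([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return dense_layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```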

There are two other techniques (and probably more) that need to be mentioned alongside the machine learning approaches above.

Cross Validation

There are many ways to cross-validate your algorithms. The high-level idea is that you use part of your data to train your machine learning algorithm, and then use the remaining data, which the algorithm has not seen, to evaluate how well it performs. You can then repeat this process with different parts of the data used for training and validation, and average the results.
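
Here is a minimal Python sketch of one common variant, k-fold cross-validation: the data is split into k folds, each fold takes one turn as the validation set while the model is trained on the rest, and the k validation scores are averaged. The line-fitting model and the noisy data are hypothetical stand-ins; any training procedure could be plugged in.

```python
def k_fold_splits(n, k):
    """Yield (train_indices, validation_indices) for k contiguous folds (k <= n).

    Real data should usually be shuffled first; this sketch keeps the order.
    """
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, validation
        start += size


def fit_line(xs, ys):
    """Least-squares line fit, used here as a stand-in model."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    w = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return w, my - w * mx


def cross_validate(xs, ys, k):
    """Mean squared error on each held-out fold."""
    scores = []
    for train, validation in k_fold_splits(len(xs), k):
        w, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        errors = [(w * xs[i] + b - ys[i]) ** 2 for i in validation]
        scores.append(sum(errors) / len(errors))
    return scores


xs = [float(x) for x in range(10)]
ys = [2.0 * x + 1.0 + (0.5 if x % 2 else -0.5) for x in xs]  # hypothetical noisy data
scores = cross_validate(xs, ys, k=5)
print(len(scores), sum(scores) / len(scores))  # number of folds, average validation error
```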

Principal Component Analysis (PCA)

This is a method for finding the directions along which the data varies the most, which makes variations more visible and helps show patterns. Using PCA can let a human see which combinations of factors account for most of the variation in the data, and can put the data in a form that humans can see, such as a 2-D plot. PCA can also be used to reduce the dimensionality of a data set by keeping only the few most important components.
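
A minimal Python sketch of PCA for two-dimensional data, assuming made-up points that lie close to the line y = 2x. It centers the data, builds the 2x2 covariance matrix, finds its largest eigenvalue and eigenvector in closed form (the first principal component), and projects each point onto that direction, reducing the data from two dimensions to one. For more dimensions you would use a general eigen-decomposition or SVD routine from a numerical library instead.

```python
import math


def pca_2d(points):
    """First principal component of 2-D data via the closed-form eigen-decomposition
    of the 2x2 sample covariance matrix. Returns (direction, explained_ratio, projections)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    centered = [(x - mx, y - my) for x, y in points]
    # Sample covariance matrix [[a, b], [b, c]].
    a = sum(dx * dx for dx, _ in centered) / (n - 1)
    c = sum(dy * dy for _, dy in centered) / (n - 1)
    b = sum(dx * dy for dx, dy in centered) / (n - 1)
    # Eigenvalues of a symmetric 2x2 matrix.
    mid = (a + c) / 2
    radius = math.sqrt(((a - c) / 2) ** 2 + b * b)
    lam1, lam2 = mid + radius, mid - radius
    # Eigenvector for the largest eigenvalue (the first principal component).
    if b != 0:
        vx, vy = lam1 - c, b
    else:
        vx, vy = (1.0, 0.0) if a >= c else (0.0, 1.0)
    norm = math.hypot(vx, vy)
    direction = (vx / norm, vy / norm)
    explained = lam1 / (lam1 + lam2) if lam1 + lam2 > 0 else 0.0
    # Reduce 2-D points to 1-D by projecting onto the first component.
    projections = [dx * direction[0] + dy * direction[1] for dx, dy in centered]
    return direction, explained, projections


points = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.0)]  # hypothetical
direction, explained, projections = pca_2d(points)
print(direction, explained)
```

For this data the first component points roughly along the line y = 2x and accounts for nearly all of the variance, so the 1-D projections keep almost all of the information.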

I hope this helps you understand the differences between the many terms that get thrown around. If I missed an approach, leave it in the comments below.

Main image from https://upload.wikimedia.org/wikipedia/commons/4/42/Tree_Parity_Machine.jpg
