Software that Forms a Robot

by David Kohanbash on January 3, 2014

Hi all

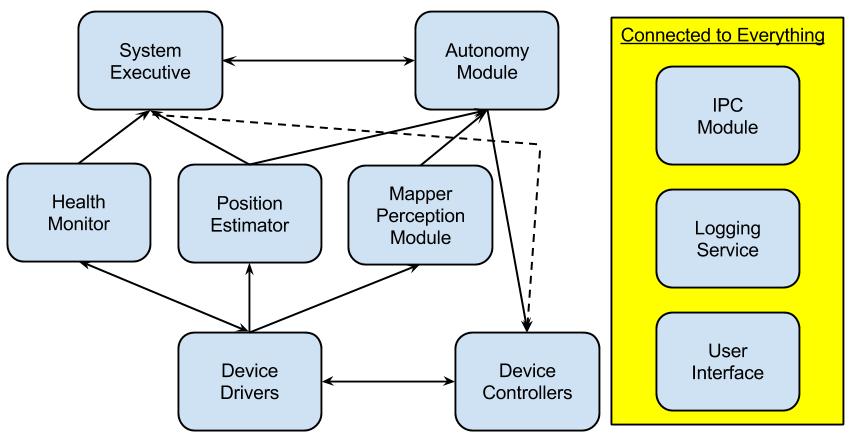

While many people find it boring, one thing that has always interested me is the software infrastructure of a robot. By infrastructure I mean the code that all robots have and that binds everything else together. This includes things like the interprocess communication (IPC) method, the system executive, the health monitor and the user interface. I will explain those modules (or in ROS speak “nodes”) in more detail soon.

In addition, after working on many projects, I have seen that most robots develop a common structure for how they work and the modules that they contain. Different robots might use different names, but the modules have similar functions. So here we go with the modules that form a robot.

Device Drivers

Device drivers are the base of the robot. These modules are responsible for communicating with the devices on the robot. These devices can be anything, including motor controllers, sensors, power management, instruments and payload items. It is very important that these programs work reliably, since if they do not work there is no need for the rest of the modules. People often have a tendency to ignore these since “they are only drivers” and “not exciting”; however, these modules must be thoroughly tested and be able to handle unexpected events. Catching errors is important and should not be ignored. Using a “simple” programming style and leaving the fancy “show off” code out of the drivers is important for the reliability of the system. The robot will have one of these per device.

Device Controllers

Device controllers are sometimes their own category and sometimes combined with the device drivers. The device controllers are responsible for controlling the devices (duhh..). As an example with a motor control driver, the device driver might just accept velocity commands and execute them; the device controller then has the job of generating those velocities. For example, if the higher level software/human says drive to a point 5 meters forward and 3 meters to the left, then the device controller can do the complex math (Pythagorean theorem anyone?) and issue speed and time commands until the robot reaches its destination. Another example is a camera device driver that just takes pictures, with a camera device controller that dynamically adjusts the image settings or takes panoramas.

Position Estimator

The position estimator is responsible for knowing where in the world the robot is. There are many ways of doing this, including GPS, RFID tags, markers and dead reckoning (combining a known prior position with new sensor data and commands). Some of the common sensors for this include Inertial Measurement Units (IMUs), inclinometers, magnetometers, gyros, and wheel encoders. One typical way of merging all of the sensor data to produce a good position estimate is with a Kalman filter.

Mapper-Perception Module

The mapper or perception module is responsible for fusing sensor data together to create a map of the environment that can then be used by the autonomy system (or sometimes the other controllers). This often relies on getting data from the position estimator to stitch the maps together. Some common sensors that need to be fused in order to build a map are laser scanners (LIDAR), cameras, sonar, radar, and ultrasonic sensors. Other tasks that fall in this category include computing a stereo reconstruction from camera images, merging onboard sensing data with prior data (such as digital elevation maps, or DEMs), and running machine vision classifiers to determine what you are seeing in the sensor data.

Note: I need to point out that there is an entire group of work called simultaneous localization and mapping, or SLAM for short, that focuses on building a map AND determining your position at the same time. (This would also make a good tutorial at some point, but until then just check out Wikipedia on SLAM.)

System Executive

The system executive is responsible for keeping track of what the robot is doing, what it should be doing, and what it should do next. This is often a high level planner that determines the overall actions of the robot while monitoring to make sure all systems are in working order. This module often has the behaviors that the robot should perform for a given situation. Sometimes parts of the autonomy module and health monitor are combined into this module. It is often considered the robot’s “brain”.

Autonomy Module

The autonomy module is responsible for planning discrete actions based on commands from the system executive. For example, if the executive issues a command to drive 5 km, the autonomy module must plan and determine how to get there safely. It does this by looking at the output from the mapper/perception module and the position estimator, and then issues drive commands for the robot. This same idea applies to robotic manipulators or any other action that the robot must take. Things like A* fall into this category (I had an AI professor who routinely joked/said that most planning algorithms don’t work in real life so you must know A*).

Health Monitor

The health monitor is in charge of monitoring sensors and other processes in order to keep the robot safe. There are many proprioceptive sensors for things such as temperature, force, voltage, and current that can be monitored. If the health monitor detects an unsafe condition or that a process crashed, it should be able to safe the robot (put it into a safe state), try to recover, and alert any process (or user) that needs to know. I know you are going to ask what happens if the health monitor crashes. This depends on your system; you might have the executive or user interface handle it, or if the health monitor sends heartbeats to other systems they will be able to respond when the heartbeats stop (think watchdog timer).

Interprocess Communication (IPC) Module

This module is super critical and must be reliable, since this is how all the processes in the system communicate with each other. However, IPC systems also tend to be very sensitive to their configuration files (which hopefully don’t change much once things are up and running). There are several common ways for these to work, including publish-subscribe models, anonymous publish, sockets, and shared memory (at some point I will add an entire post dedicated to IPC). There are certain items you might not want to pass using your IPC system, including video and images. For these large data items it is often good to save them to disk and then just pass the file path in an IPC message. You should also monitor the IPC system closely, since it is often a bottleneck in the data flow. Two common IPC methods are CMU IPC, which has some weirdness when operating in mixed 32-bit/64-bit systems (make sure to declare all integers with their lengths: int32, int16, etc.), and ROS, which has a built-in IPC system.

Logging Service

Finally we are up to logging. Logging has two primary purposes: 1) for debugging and 2) for the data.

1) Having data logs can be extremely important for debugging why something unexpected happened. This can be raw sensor data, error messages, or processed data. A common logger function that helps with debugging and development is the ability to play back logs.

2) Often the purpose of the robot is for the data. If the robot needs to check for toxic chemicals or find fish, you want to be able to get that data back for analysis (and usually also to get paid).

You need to be really careful with logging. I have seen many cases where logging becomes the most intensive process and starts to consume lots of processor time and memory. You also must be really careful to have a logging format that makes it easy to determine what each log is (don’t rely on the back of a crumpled napkin in your pocket), and save your logs in a way that you will still be able to access them in years to come.

User Interface

Last but not least is the user interface. This is pretty self-explanatory. You need a way to control the robot and view data as needed. There are two categories of user interfaces. The first is the command line; this is only for us software types (the rest of the world does not love the terminal). The second type is a proper interface that others can use. A proper interface can be anything from a hand-held joystick to a large computer station. Whatever you choose, make sure it is easy to use and provides the information that the user needs to see (and not more than the user needs to see; you can put that on another screen).

There you have it. That is what makes up a robot. As I said before, every robot is slightly different, but they tend to have the modules described above. Do you disagree or have any additions to make? Leave your comments below.

And with all of these modules use lots of comments in your code. When you relook at the code in a year you will need them!
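As a small illustration of the device controller example from earlier (drive to a point 5 meters forward and 3 meters to the left), here is a minimal Python sketch of the math involved. The function name `plan_straight_drive`, the turn-then-drive-straight strategy, and the 0.5 m/s speed are my own assumptions for illustration; a real controller would also close the loop on sensor feedback instead of trusting a fixed time.

```python
import math


def plan_straight_drive(forward_m, left_m, speed_mps):
    """Convert a goal relative to the robot into a heading, distance and drive time.

    Hypothetical helper: assumes the robot first turns in place toward the
    goal and then drives in a straight line at a constant speed.
    """
    if speed_mps <= 0:
        raise ValueError("speed_mps must be positive")
    # Pythagorean theorem: straight-line distance to the goal.
    distance_m = math.hypot(forward_m, left_m)
    # Angle to turn before driving; positive means a turn to the left.
    heading_rad = math.atan2(left_m, forward_m)
    # Time to hold the speed command so the robot covers the distance.
    duration_s = distance_m / speed_mps
    return heading_rad, distance_m, duration_s


heading, distance, duration = plan_straight_drive(5.0, 3.0, 0.5)
print(f"turn {math.degrees(heading):.1f} deg left, "
      f"drive {distance:.2f} m for {duration:.1f} s")
# prints: turn 31.0 deg left, drive 5.83 m for 11.7 s
```

The device driver in this picture would only ever see the resulting turn and speed commands; the geometry stays in the controller.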
