Developing Trust in Autonomous Robots Seminar from Michael Wagner

by David Kohanbash on March 2, 2015

Hi all

I recently had the opportunity to hear a talk by a colleague I have worked with many times over the years. The talk was all about how to build safe and robust systems. This is a critical topic that we often pass over, but it needs to be addressed if robots are to enter the workforce alongside people.

Below are some of the key takeaway points I pulled from the talk, followed by the YouTube clip of the talk. I have embellished some of these points with my own comments.

Physical Safety

- One way to determine how close people can safely be to an autonomous vehicle is to look at how quickly you can stop the vehicle and how far it travels in that time. When estimating the time to stop, you need to account for human reaction time, signal latencies, and the mechanical stopping time of the vehicle.

- You can reduce that time by having an independent, safety-critical system onboard that evaluates safety at run time and can stop the vehicle faster than a human can. I really like this idea and have done similar (more basic) things in the past.
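The stopping-distance reasoning in the two points above can be turned into a quick back-of-the-envelope calculation. This is a minimal sketch under my own simplifying assumptions, not the method from the talk: the vehicle holds its speed during the reaction and signal-latency phases, then decelerates uniformly to a stop (so while braking it covers half the distance it would at full speed). The function name and all numbers are hypothetical.

```python
def stopping_distance(speed_mps, reaction_s, latency_s, brake_time_s):
    """Distance (m) covered from the moment a hazard appears until the vehicle stops.

    Hypothetical model: constant speed during reaction and signal latency,
    then uniform deceleration to zero over brake_time_s.
    """
    if min(speed_mps, reaction_s, latency_s, brake_time_s) < 0:
        raise ValueError("inputs must be non-negative")
    # Full speed while the operator (or onboard monitor) reacts and the stop signal propagates.
    travel_before_braking = speed_mps * (reaction_s + latency_s)
    # Uniform deceleration: the average speed while braking is speed_mps / 2.
    braking_distance = 0.5 * speed_mps * brake_time_s
    return travel_before_braking + braking_distance


# Illustrative numbers only: 5 m/s, 0.1 s signal latency, 1.0 s mechanical stop.
human = stopping_distance(5.0, reaction_s=1.0, latency_s=0.1, brake_time_s=1.0)
onboard = stopping_distance(5.0, reaction_s=0.05, latency_s=0.1, brake_time_s=1.0)
print(f"human operator: {human:.2f} m, onboard monitor: {onboard:.2f} m")
```

In this made-up example the mechanical braking distance is the same in both cases; the onboard monitor wins purely by cutting the time that passes before braking starts.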

Testing Motivation

- IEC 61508 is a key safety standard for determining how safe a system needs to be. It provides guidance on which traits map to a given safety integrity level (SIL). However, dynamically changing parameters and AI generally push a system into the NOT-safe categories.

- For safety-critical systems, we need to look at the worst-case scenarios (not the nominal or best case).

- We need to test because scenarios will come up that violate assumptions we did not even know we had made.

- “No amount of experimentation can ever prove me right; a single experiment can prove me wrong” – Albert Einstein

- Software cares about the range of its inputs, not necessarily about how long the code runs. (Duration can still matter and needs to be tested for things like memory usage and other odd problems that only show up after the code has been running for a while.)

- The value of a test is not the test itself, but what you learn from it and how you use that to improve the system.
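The point about duration can be checked mechanically with a soak test: run the same code path many times and see whether memory keeps growing. The sketch below uses Python's standard-library `tracemalloc` module; `memory_growth`, `leaky_step`, and `clean_step` are hypothetical names for illustration, and a real soak test would exercise the actual robot software for hours rather than a few thousand iterations.

```python
import tracemalloc


def memory_growth(step, iterations=10_000, warmup=100):
    """Return bytes of traced memory still held after calling step() repeatedly."""
    # Warm up first so one-time allocations (caches, lazy init) are not counted.
    for _ in range(warmup):
        step()
    tracemalloc.start()
    try:
        baseline, _ = tracemalloc.get_traced_memory()
        for _ in range(iterations):
            step()
        current, _ = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return current - baseline


_history = []


def leaky_step():
    # Hypothetical bug: every call keeps a new 100-byte buffer alive forever.
    _history.append(bytes(100))


def clean_step():
    # Allocates temporary objects that are all freed when the call returns.
    sum(range(100))


print("leaky:", memory_growth(leaky_step), "bytes")
print("clean:", memory_growth(clean_step), "bytes")
```

Only allocations made while tracing is active are counted, which is why the warm-up runs first: one-time setup costs are excluded, and what remains is growth that scales with run time.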

Testing Approach

- Field testing is very important. Field test until errors diminish, then simulate the system for the “next million miles”.

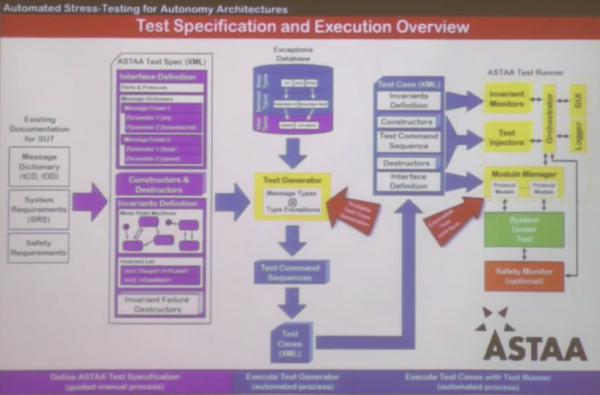

- The question then becomes how to simulate and error-check the code. One method is robustness testing.

- Robustness testing uses automated methods to find problems in the software, so that developers know what to fix.

- With robustness testing, you can apply random inputs to the system and see what causes it to crash or hang. This lets you simulate inputs, not just scenarios.

- You can also probabilistically modify the real-world inputs entering your system and verify the outputs.

- Use the system requirements to generate safety rules that are checked in real time. The great thing about safety rules is that if a rule ever fails, you know something is wrong, and the code/test/robot can stop.

- You can also add a dictionary test to your code, where you control the inputs and check what the outputs are. You can build the dictionary up over time so that, as you continue testing the system, you do not reintroduce errors you have already seen.

- Temporal logic is useful for creating rules such as “get a message within x seconds”, as opposed to “a response must arrive eventually”. Tests need to reflect that distinction.
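The random-input and perturbed-input robustness testing described above can be sketched in a few lines. This is a minimal illustration, not the tooling from the talk: `steering_command` is a hypothetical component with a planted bug, `RECORDED` stands in for logged real-world data, and only crashes and bad outputs are detected (catching hangs would additionally need a per-call timeout, omitted here).

```python
import math
import random


def steering_command(distance_m, bearing_rad):
    """Hypothetical component under test: steer toward a target, limited to +/-0.5 rad."""
    gain = 1.0 / distance_m  # planted bug: raises ZeroDivisionError when distance_m == 0
    return max(-0.5, min(0.5, gain * bearing_rad))


SPECIAL_FLOATS = [0.0, -0.0, 1e-300, 1e300, -1e300, math.inf, -math.inf, math.nan]


def random_inputs(rng):
    """Purely random inputs, deliberately mixing in edge-case floats."""
    def one():
        if rng.random() < 0.3:
            return rng.choice(SPECIAL_FLOATS)
        return rng.uniform(-100.0, 100.0)
    return (one(), one())


RECORDED = [(12.0, 0.1), (3.5, -0.4), (0.8, 0.05)]  # hypothetical logged (distance, bearing)


def perturbed_inputs(rng):
    """Recorded real-world inputs with random Gaussian noise added."""
    distance, bearing = rng.choice(RECORDED)
    return (distance + rng.gauss(0.0, 1.0), bearing + rng.gauss(0.0, 0.1))


def output_ok(cmd):
    # Property for this sketch: the command must be finite and within +/-0.5.
    return math.isfinite(cmd) and -0.5 <= cmd <= 0.5


def robustness_test(func, make_inputs, trials=1000, seed=0):
    """Call func on generated inputs; collect crashes and property violations."""
    rng = random.Random(seed)  # fixed seed so every failure is reproducible
    failures = []
    for _ in range(trials):
        args = make_inputs(rng)
        try:
            result = func(*args)
        except Exception as exc:  # any crash counts as a failure
            failures.append((args, repr(exc)))
            continue
        if not output_ok(result):
            failures.append((args, f"bad output {result!r}"))
    return failures


random_failures = robustness_test(steering_command, random_inputs)
print(len(random_failures), "failures, e.g.", random_failures[:1])
print(robustness_test(steering_command, perturbed_inputs))
```

The random run finds the division by zero that the gently perturbed real-world data almost never hits. Note that an output check alone can still miss problems: a NaN input comes out of this function as a valid-looking 0.5, because Python's `min` and `max` do not propagate NaN, so checking inputs matters too.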
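Safety rules derived from requirements, including a bounded-time rule of the “within x seconds” kind, can be checked at run time by a small monitor. This is a hedged sketch: `SafetyMonitor`, the 2 m/s speed limit, and the 0.5 s heartbeat deadline are hypothetical stand-ins for real requirements. In a real system the times would come from a monotonic clock and `check` would run on a timer, since otherwise a missed deadline is only noticed at the next check.

```python
from dataclasses import dataclass, field


@dataclass
class SafetyMonitor:
    """Hypothetical runtime checker for rules derived from requirements."""
    max_speed_mps: float = 2.0        # e.g. "the robot shall not exceed 2 m/s"
    heartbeat_timeout_s: float = 0.5  # e.g. "a heartbeat shall arrive within 0.5 s"
    last_heartbeat_s: float = 0.0     # monitor start time, then time of last heartbeat
    violations: list = field(default_factory=list)

    def on_heartbeat(self, t):
        self.last_heartbeat_s = t

    def check(self, t, speed_mps):
        """Evaluate every rule at time t. Returns False once any rule has ever failed."""
        # Written as "not <=" so that a NaN speed also counts as a violation.
        if not abs(speed_mps) <= self.max_speed_mps:
            self.violations.append((t, "speed limit exceeded"))
        # Bounded response: the next heartbeat must arrive within the timeout,
        # not merely "eventually".
        if t - self.last_heartbeat_s > self.heartbeat_timeout_s:
            self.violations.append((t, "heartbeat deadline missed"))
        return not self.violations  # latched: the robot/test should stop


monitor = SafetyMonitor()
monitor.on_heartbeat(0.0)
print(monitor.check(0.3, speed_mps=1.5))  # True: all rules hold
monitor.on_heartbeat(0.4)
print(monitor.check(1.0, speed_mps=1.5))  # False: 0.6 s since the last heartbeat
print(monitor.violations)                 # [(1.0, 'heartbeat deadline missed')]
```

Latching the result means one failed rule is enough to stop the robot or fail the test, which matches the idea that a rule violation always signals that something is wrong.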
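One way to read the dictionary test mentioned above is as a growing table of controlled inputs and their expected outputs that every build must pass. The sketch below assumes a hypothetical `clamp_speed` function and hypothetical entries; in practice the dictionary would live in a file under version control and gain an entry each time a bug is found and fixed.

```python
import math


def clamp_speed(cmd_mps, limit_mps=2.0):
    """Hypothetical function under test: clamp a speed command, stop on bad input."""
    if not math.isfinite(cmd_mps):
        return 0.0  # treat NaN/inf as "stop"
    return max(-limit_mps, min(limit_mps, cmd_mps))


# Each entry: (input, expected output, note). Entries are only ever added,
# so an error that was fixed once cannot silently come back.
REGRESSION_DICTIONARY = [
    (1.0, 1.0, "nominal"),
    (5.0, 2.0, "above limit"),
    (-5.0, -2.0, "below limit"),
    (math.nan, 0.0, "regression: NaN must not pass through the clamp"),
    (math.inf, 0.0, "regression: inf must not command full speed"),
]


def run_dictionary(func, cases):
    """Return every case whose actual output differs from the expected output."""
    failures = []
    for value, expected, note in cases:
        actual = func(value)
        if actual != expected:
            failures.append((value, expected, actual, note))
    return failures


print(run_dictionary(clamp_speed, REGRESSION_DICTIONARY))  # []
```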

Things that commonly fail testing:

- Improper handling of floating-point numbers (out-of-bounds values, NaN, infinity (inf), etc.)

- Array indexing errors

- Memory issues: leaks, allocating too much or too little memory, buffer overflows, etc.

- Time going backwards and old/stale data (I have seen this error more times than I can remember)

- Problems handling dynamic state
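Several items on that list (non-finite floats, out-of-bounds values, time going backwards, and stale data) can be caught where data enters a module. Here is a minimal sketch of such a guard; `Reading`, `ReadingGuard`, and all thresholds are hypothetical. Ideally the timestamps and `now_s` come from the same monotonic clock (for example `time.monotonic()`), since a wall clock can itself jump backwards.

```python
import math
from dataclasses import dataclass


@dataclass
class Reading:
    stamp_s: float  # when the sensor took the measurement, in seconds
    range_m: float  # hypothetical range measurement, in meters


class ReadingGuard:
    """Hypothetical input guard for several of the failure modes listed above."""

    def __init__(self, min_range_m=0.05, max_range_m=30.0, max_age_s=0.2):
        self.min_range_m = min_range_m
        self.max_range_m = max_range_m
        self.max_age_s = max_age_s
        self.last_stamp_s = None  # timestamp of the last accepted reading

    def check(self, reading, now_s):
        """Return None if the reading is usable, otherwise a reason to reject it."""
        if not (math.isfinite(reading.stamp_s) and math.isfinite(reading.range_m)):
            return "non-finite value (NaN or inf)"
        if not self.min_range_m <= reading.range_m <= self.max_range_m:
            return "range out of bounds"
        if self.last_stamp_s is not None and reading.stamp_s <= self.last_stamp_s:
            return "time went backwards (or duplicate timestamp)"
        if now_s - reading.stamp_s > self.max_age_s:
            return "stale data"
        self.last_stamp_s = reading.stamp_s
        return None


guard = ReadingGuard()
print(guard.check(Reading(10.00, 4.2), now_s=10.05))       # None: accepted
print(guard.check(Reading(10.10, math.nan), now_s=10.12))  # non-finite value
print(guard.check(Reading(9.90, 4.1), now_s=10.15))        # time went backwards
print(guard.check(Reading(10.20, 4.0), now_s=10.90))       # stale data
```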

And now the part you have been waiting for: the talk.
