Robot Path (Motion) Planning Overview

by David Kohanbash on December 2, 2014

This post is going to be a summary of the different path planning (ie. route finding) algorithms that are commonly used. I am not going to detail any of the particular algorithms, but rather give you a launchpad for finding a suitable algorithm for your application. You should remember that often you will mix and match different ideas as you create a motion planner for your robots. For the sake of this post I will use path planning and motion planning interchangeably, but that is not really an accurate thing to do.

The primary distinction that I will make in path planning algorithms is whether the robot knows about the global environment or not. Informed search is when the robot has the ability to create a map (using sensors) or it is provided with a pre-existing map. In uninformed search the robot is operating blind and it needs to search its way around in order to determine how to reach the goal.

One small note before we start is to remember that we want to simplify things as much as possible. This can mean making our maps as small as possible or only planning in 2D instead of 3D when possible.

Uninformed Search

BFS

Breadth First Search is a method of exploring the world where you want to make sure you cover every possible choice (hence it is “optimal”). In order to make sure you cover every possibly case you take one step at a time for example if you have a grid you can search it one row at a time. The downside to being so thorough is that it can be computationally expensive. In reality this often can not be done on a robot since if you start going down one path, you do not want to keep switching paths as it will be highly inefficient.

| 1 | 2 | 3 |

| 6 | 5 | 4 |

| 7 | G | – |

In the example above it took 7 steps to reach the goal.

DFS

Depth First Search is a good option when you want to follow each path to the end in order to find a “goal” at the end. This can be less optimal since you are not evenly covering each path, however this can be more efficient since if a goal is halfway down a column you can find it without needing to search every path. This can also be computationally easier if you find the “goal” faster then with BFS. This can be good if there is a single goal, but if there are multiple goals/paths you might miss the shorter “optimal” path.

| 1 | 4 | – |

| 2 | 5 | – |

| 3 | G | – |

In the example above it tool 5 steps to reach the goal

Bug

There is a whole class of “bug” algorithms. For bug algorithms to work you need to have 2 things: robot-to-goal heading and wall/obstacle detection. In this class of algorithms you always head towards the goal, and if you encounter an obstacle you follow the wall/obstacle until you can resume following the desired heading.

In the Bug 0 algorithm you do as mentioned above. The problem is if you do not have memory of where the robot has been, you can end up doing loops and never reaching the goal. So the Bug 1 algorithm is like Bug 0 but also retains a memory of where it has been. In Bug 1 you record at each point how close you are to the goal as you circle an obstacle, and then go back to the closest point when trying to reach the goal. In Bug 2 instead of fully circling an obstacle to determine the shortest path to a goal, you can be greedy and head off from that path as soon as you think you found a good shot to the goal (while still retaining memory so that you do not end up doing loops).

Informed Search

In the prior examples of uninformed search the robot needed to explore the world in order to determine a path. Now when we have a map which can be provided in advance such as in the case of a known world, or if we can make a map by using onboard sensors we can “intelligently” determine an appropriate path for the robot to travel.

A*

A* is probably the most common path planning algorithm. The idea is simple, the implementation is simple (relative to other algorithms), and it can work well. I took an Artificial Intelligence (AI) course where the professor said if we learn one thing from the class it should be A*, and if any of us does not know A* at the end of the class we should fail the class.

In A* you can create a rule (or a “heuristic”) that determines the cost (or difficulty) of getting from a current position to the desired goal. This rule is often based on many things and can be up to you. In some cases you can change the cost function dynamically based on other heuristics (maybe do one thing when indoors and another thing when outside). For example you can say that:

[blockquote]cost=distance + (slopeAngle/someNumber) + (difficultyOfTerrainValue)[/blockquote]

There are many ways to figure out the path to the goal. The most common are to discretize the map into a grid and then determine a cost from grid box to grid box, followed by summing all of those costs to find the path with the lowest cumulative cost. The other way is to determine various nodes (points) in your map and then determine the cost between each of those points, which can then be summed for a total path cost.

Often if you have a grid as your map the first option above makes sense. However as an example if you are trying to plan a path between cities, it might make sense to treat each city as a node and not create a grid over all of the cities or use RRT’s (see below).

D*

D* improves upon A* and is good for cases where parts of the world/map are unknown and are being discovered. D* allows path costs to change dynamically so that the overall goal can chance as the robot is in motion for better performance. This can be critical for a robot working in an environment where there is unknown and the world is changing (such as people walking around).

D* initially starts at the goal and works back to the start location, so a block in the map adjacent to the goal would have a value of 1 and then the next farthest from the goal is 2, etc…

There are several heuristics that D* uses, each heuristic value is given to each cell in the map. The first value h is a raw estimate of distance to goal assuming no obstacles are in the path. The second value k is an estimate of the shortest path length to the goal. Both of these values will keep getting updated as the robot learns about the world and obstacles are detected.

There are many version of D* that are all optimized a bit different to work in real systems. Some of these include Field D*, Anytime D*, and D* Lite.

D* Lite is not strictly based on D* however it uses the same ideas as D*. D* Lite is often what is actually used in robots since it often has better performance than the basic D*.

RRT

Rapidly Exploring Random Tree is a useful approach for exploring and finding a path in difficult and crowded environments. The basic idea is that you randomly place n points (where n can be a large number) on a map. You then start from a point (where the robot is) and then start trying to connect the dots from your current point to the nearest point. You then connect each of those points to their nearest points. This lets you build a map of possible routes. One nice thing with this approach is that having a grid (such as in A*) is not that important.

There are a bunch of strategies for “randomly” placing the n points. Some of them include:

1. Uniformly placing the points

2. Placing more points near obstacles

3. Placing more points in narrow corridors (since often you will not get enough points in a narrow corridor to plan a path)

4. Bias points on the path towards the goal

See here for an Adaptive version of an RRT https://robotsforroboticists.com/adaptive-rrt-arrt-code/

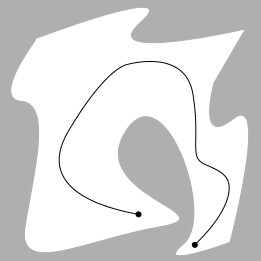

PRT

Probabilistic Roadmaps are nice since you can use probabilities to dynamically explore the map. The basic idea is that from your current location you generate a random point (biased towards the goal) and try connecting a line to that quasi-random point. You then check if the line is valid and only occupies free space without crossing into obstacles. Many of the strategies for placing points with the RRT can also be applied to PRT’s.

Above is the basic implementation. In my prior work I have made the distance from the current point to the random point that is connected with a line a dynamic parameter. I increase the line distance when I have had many successful line connections. When many lines are failing due to crossing into obstacles I decrease the distance that the random point is put at. Another adaptation I have made is by dynamically changing the biasing term to control how often PRT tries to put the point towards the goal, and how often it puts the point in a different direction.

A good book for learning more about path planning is Principles of Robot Motion by Howie Choset and a bunch of other people. It has a good discussion on A* and D* in the appendix.

Leave a Reply